Despite their many capabilities, Large Language Models (LLMs) have a serious limitation: they’re stuck in time and their knowledge is limited to the data they have been trained on.

Updating the knowledge of an LLM can take two forms: fine-tuning, which we will address in a future post, and the ever-present RAG. RAG, short for Retrieval Augmented Generation, has garnered a lot of attention in the GenAI community and for good reasons. You “simply” hook the LLM up to your documents (more on that later), and it can suddenly tackle any question, as long as the answer is somewhere in the documents.

This is almost too good to be true: it offers endless possibilities, a simple concept and, thanks to advances in the tooling ecosystem, a straightforward implementation. It is hard to imagine at first sight how it could go wrong.

Yet wrong it goes, and we have seen it happen consistently with our own chatbots as well as with SaaS products we have tested.

In this article, the first of a series on evaluation in LLMs, we will unpack how retrieval impacts the performance of RAG systems, why we need systematic evaluation and what the different schools and frameworks of evaluation are. If you’ve been wondering about evaluating your own RAG system and needed an introduction, look no further.

The perfect RAG assumptions

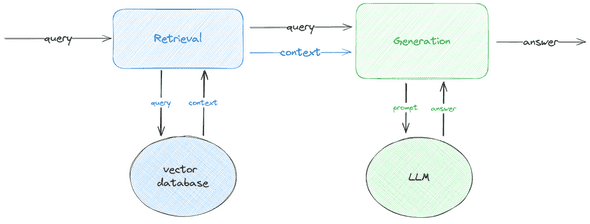

Simply put, a RAG system retrieves documents similar to your query and uses them to generate a response (see Figure 1).

For this to work perfectly, the following assumptions should hold:

- In retrieval, you need to retrieve relevant data, all the relevant data and nothing but the relevant data.

- In generation, the LLM should know enough about the topic to synthesize the retrieved documents, yet be willing to override its prior knowledge when confronted with conflicting or updated evidence.

Good retrieval is vital for a good RAG system. If you feed garbage into your LLM, you should not be too surprised when it spouts garbage back at you. But good retrieval becomes even more essential when using smaller LLMs. These models are not always the best at identifying and filtering irrelevant context.

Retrieval can indeed be one of the weakest parts of a RAG system. Despite the hype around vector databases and semantic search, the problem of knowledge indexing is still far from being solved.

Retrieval, semantic search and everything in between

Because the context window of an LLM is limited, stuffing your whole knowledge base into a prompt is not an option. Even if it were, LLMs are not as good at extracting information from a long piece of text as they are from shorter contexts. This is why retrieval is needed to find the documents most relevant to your query.

While this can be done with good old keyword search, semantic search is increasingly becoming the norm for RAG applications. This makes sense. Suppose you ask, “Why do we need search in RAGs?” Even though the previous paragraph does not contain the key words of the query, semantic search may be able to find it, as it is semantically aligned with the query. Keyword search, on the other hand, will fail, as neither “search” nor “RAG” appears in the text.
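To make the contrast concrete, here is a deliberately naive keyword matcher: exact token overlap after dropping a small, hypothetical stop-word list, with no stemming. Applied to the query above and a sentence from the previous paragraph, it finds no shared content word. This is a sketch for illustration, not how any particular search engine works.

```python
import re

# Hypothetical, minimal stop-word list; real keyword engines (e.g. BM25-based
# ones) typically add stemming and much larger lists.
STOP_WORDS = {"a", "an", "the", "do", "we", "in", "why", "is", "to", "this",
              "that", "are", "your", "of", "it", "if", "not", "as"}

def content_words(text: str) -> set[str]:
    """Lowercase the text, split it into words and drop stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}

def keyword_overlap(query: str, passage: str) -> set[str]:
    """Content words shared verbatim by the query and the passage (no stemming)."""
    return content_words(query) & content_words(passage)

query = "Why do we need search in RAGs?"
passage = "This is why retrieval is needed to find the documents most relevant to your query."
print(content_words(query))             # the words a keyword engine would look for
print(keyword_overlap(query, passage))  # set(): nothing in common
```

With stemming, “need” and “needed” would match, but a lone match on a generic verb says little about whether the passage answers the question.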

In practice, the process is a bit more involved:

- Documents in a knowledge base are divided into smaller chunks.

- An embedding model is used to “vectorize” these chunks.

- These vectors are indexed into a vector database.

Upon receiving a query:

- The query is vectorized using the same embedding model.

- The closest vectors are retrieved from the vector database.
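The indexing and query steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model (so, unlike real semantic search, it only matches shared words), `chunk` uses fixed-size word windows, and `VectorIndex` is an in-memory, brute-force stand-in for a vector database. All names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: an L2-normalised bag-of-words vector,
    stored sparsely as {token: weight}. Unlike a real embedding model, it only
    captures shared words, not meaning."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Both vectors are L2-normalised, so their dot product is the cosine similarity."""
    return sum(w * v.get(token, 0.0) for token, w in u.items())

def chunk(document: str, size: int = 50) -> list[str]:
    """Split a document into chunks of at most `size` words (one naive strategy among many)."""
    words = document.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

class VectorIndex:
    """In-memory, brute-force stand-in for a vector database."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, dict[str, float]]] = []

    def add(self, text: str) -> None:
        self.entries.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(query)  # the query goes through the same embedding function
        scored = [(cosine(q, vec), text) for text, vec in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]

# Indexing: chunk, embed and store every document.
index = VectorIndex()
for doc in [
    "Bazel builds software from rules and targets.",
    "Vector databases store embeddings for similarity search.",
    "Quenya is a language invented by Tolkien.",
]:
    for piece in chunk(doc):
        index.add(piece)

# Querying: embed the query and retrieve the closest chunk.
best_score, best_chunk = index.search("How do vector databases search embeddings?", k=1)[0]
print(best_chunk)  # Vector databases store embeddings for similarity search.
```

Swapping `embed` for a real embedding model and `VectorIndex` for a real vector database leaves the shape of the pipeline unchanged; each of those choices, along with the chunking strategy, is a parameter whose effect on retrieval quality has to be evaluated.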

Experiments vs. Eyeballing: or why do we need evaluation anyway?

We’ve been to so many demos and presentations where questions about evaluation were answered with a variation of “evaluation is on our future agenda” or “we changed the [prompt|chain|model|temperature] until the answer looked good”[1] that we internally coined a term for this: eyeballing™.

When “eyeballing”, the most probable scenario is that someone, likely the engineer working on the RAG app, tests the app with some queries, and for one or more of them the generated answer is subpar. The engineer debugs these cases ad hoc and finds one or more of the following problems:

- Retrieved references are not relevant to the query.

- The answer is not truthful to the retrieved content.

- The answer does not address the question.

The engineer changes something in the implementation, and now the answer looks better (for some, most probably vague, definition of “better”).

There are many problems with this approach:

- No benchmark: There is no guarantee that the introduced change did not degrade performance on other questions.

- No experiment tracking: Likely, none of the intermediate states were committed or properly tracked, so we don’t know which combinations of parameters were tested.

- No evaluation metrics: In the absence of an evaluation framework that defines the notion of “better”, we cannot numerically compare the current RAG state to any other possible state.

The closest software engineering metaphor to the eyeballing approach is manually testing every change applied to the code without having a proper test suite.
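Continuing the metaphor, a benchmark plays the role of the test suite. The sketch below shows one minimal way to get the three missing pieces: a fixed benchmark, a score computed over all of its queries (here mean recall@k, one possible metric among many), and a log of every configuration tried. The benchmark, the two retrievers and the `chunk_size` values are hypothetical and hard-coded for illustration.

```python
from statistics import mean
from typing import Callable

Retriever = Callable[[str], list[str]]  # maps a query to ranked chunk IDs

def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant chunk IDs that appear in the top-k results."""
    return len(set(retrieved[:k]) & relevant) / len(relevant)

def evaluate(retrieve: Retriever, benchmark: list[dict], k: int) -> float:
    """Mean recall@k over every benchmark query, not only the one that looked wrong."""
    return mean(recall_at_k(retrieve(item["query"]), item["relevant"], k) for item in benchmark)

experiment_log: list[dict] = []  # in practice: a file, a database or a tracking tool

def run(name: str, config: dict, retrieve: Retriever, benchmark: list[dict], k: int = 2) -> float:
    """Score one configuration and record it, whatever the outcome."""
    score = evaluate(retrieve, benchmark, k)
    experiment_log.append({"name": name, "config": config, "k": k, "score": score})
    return score

# Hypothetical benchmark and retrievers, hard-coded for illustration.
benchmark = [
    {"query": "q1", "relevant": {"a", "b"}},
    {"query": "q2", "relevant": {"c"}},
]
baseline = {"q1": ["a", "x", "b"], "q2": ["c", "y"]}
tweaked = {"q1": ["a", "b"], "q2": ["y", "z", "c"]}  # fixes q1, breaks q2

base_score = run("baseline", {"chunk_size": 256}, lambda q: baseline[q], benchmark)
new_score = run("tweaked", {"chunk_size": 512}, lambda q: tweaked[q], benchmark)
print(base_score, new_score)  # 0.75 0.5
```

Eyeballing `q1` alone would suggest that the tweak is an improvement; the benchmark shows it is a regression, and the log keeps both configurations for later comparison.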

The two schools of evaluation: human vs. machine

By now it should be clear why evaluating RAG systems is a must. The question has been approached from various angles, with different evaluation metrics and strategies. We can, however, divide these approaches according to whether the evaluator, or the oracle (as it is typically called in Machine Learning and Expert Systems), is a human or an LLM.

- In human-based evaluation, a human labeler rates the relevance of retrieved documents, either repeatedly (for every experimental setting), or as a one-off, by creating a benchmark of queries and associated relevant documents.

- In LLM-based evaluation, an LLM, usually a sufficiently powerful one, evaluates whether and how the retrieved content is relevant to a query.

Building a benchmark

Note that in both cases, you need a benchmark to evaluate the RAG system against. With LLM-based evaluation, this is usually a set of queries over the document database. In human-based evaluation, benchmarks can be more elaborate (more on that below).

Building a useful benchmark is not an easy task. One should balance the types of queries asked, their statistical incidence over the database and the value in catering to a specific subset of queries as opposed to doing a good job over all queries. Exploring these considerations is beyond the scope of this post.
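As a rough sketch of the difference in shape, a benchmark entry for LLM-based evaluation may need only a query, while human-based evaluation adds labels such as the IDs of the relevant chunks and, for hybrid approaches, a human-written reference answer. The field names and the JSON-lines layout below are assumptions for illustration, not a standard format.

```python
import json
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class BenchmarkItem:
    """One benchmark entry; field names are illustrative, not a standard format."""
    query: str
    # Relevant chunk IDs, labeled by a human; empty for purely LLM-based evaluation.
    relevant_chunk_ids: set[str] = field(default_factory=set)
    # Optional human-written "model" answer, as used by hybrid metrics.
    reference_answer: Optional[str] = None

    @property
    def is_labeled(self) -> bool:
        return bool(self.relevant_chunk_ids)

def load_benchmark(lines: list[str]) -> list[BenchmarkItem]:
    """Parse one JSON object per line (a hypothetical JSON-lines layout)."""
    items = []
    for line in lines:
        raw = json.loads(line)
        items.append(BenchmarkItem(
            query=raw["query"],
            relevant_chunk_ids=set(raw.get("relevant", [])),
            reference_answer=raw.get("answer"),
        ))
    return items

benchmark = load_benchmark([
    '{"query": "How do I declare a dependency?"}',  # enough for LLM-based evaluation
    '{"query": "What is a toolchain?", "relevant": ["doc-12"], "answer": "hypothetical reference answer"}',
])
print([item.is_labeled for item in benchmark])  # [False, True]
```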

Human-based evaluation

Human-based evaluation is closer to the evaluation paradigm in classic Machine Learning. One can easily apply evaluation metrics originally devised for Information Retrieval. These should, however, be adapted to the RAG retrieval setting, where only the top k documents are passed on to the LLM as context and the order in which these documents are retrieved does not matter. Instead of raw recall and precision, we should think of both as functions of k.

A higher precision at k means less noise is mixed with the signal, while a higher recall at k means that more relevant information is retrieved. For a given query and a fixed k, both are proportional to the number of relevant documents among the top k, so they go hand in hand.

Besides using k as a cut-off, we can also consider other parameters, such as a minimum similarity threshold between the query and the retrieved documents.
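A minimal sketch of precision and recall as functions of k, plus the similarity-threshold variant. It assumes the retriever returns (chunk ID, similarity) pairs sorted by decreasing similarity and that a human has labeled the relevant chunk IDs; both the scores and the labels are made up. Sets are used for the passed chunks, so the order within the cut-off is ignored.

```python
# Hypothetical retriever output: (chunk ID, similarity), sorted by decreasing similarity.
ranked = [("c1", 0.91), ("c4", 0.85), ("c2", 0.80), ("c9", 0.42), ("c3", 0.40)]
# Hypothetical human labels: the chunks that are actually relevant to the query.
relevant = {"c1", "c2", "c3"}

def top_k(ranked: list[tuple[str, float]], k: int) -> set[str]:
    """Chunks passed to the LLM when keeping the k best; a set, so order is ignored."""
    return {chunk_id for chunk_id, _ in ranked[:k]}

def above_threshold(ranked: list[tuple[str, float]], threshold: float) -> set[str]:
    """Chunks passed to the LLM when keeping every chunk at or above a similarity threshold."""
    return {chunk_id for chunk_id, score in ranked if score >= threshold}

def precision(passed: set[str], relevant: set[str]) -> float:
    """Share of the passed chunks that are relevant (low precision = more noise)."""
    return len(passed & relevant) / len(passed) if passed else 0.0

def recall(passed: set[str], relevant: set[str]) -> float:
    """Share of the relevant chunks that were passed (low recall = missing information)."""
    return len(passed & relevant) / len(relevant) if relevant else 0.0

for k in (1, 3, 5):
    passed = top_k(ranked, k)
    print(f"k={k}: precision={precision(passed, relevant):.2f}, recall={recall(passed, relevant):.2f}")
# k=1: precision=1.00, recall=0.33
# k=3: precision=0.67, recall=0.67
# k=5: precision=0.60, recall=1.00
```

Within each row, both metrics share the same numerator (relevant chunks passed), which is why they move together for a fixed k; across rows, a larger k buys recall at the cost of precision. In this example, `above_threshold(ranked, 0.80)` passes the same three chunks as k=3.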

Note that although this approach is more time-consuming, evaluation can still be automated once a one-off benchmark is created and evaluation metrics are defined.

LLM-based evaluation

LLM-based evaluation is easier to set up and automate, since it does not require any human involvement beyond creating a benchmark of queries. This is the core of the RAGAS and TruLens evaluation frameworks, which we discuss below.

TruLens

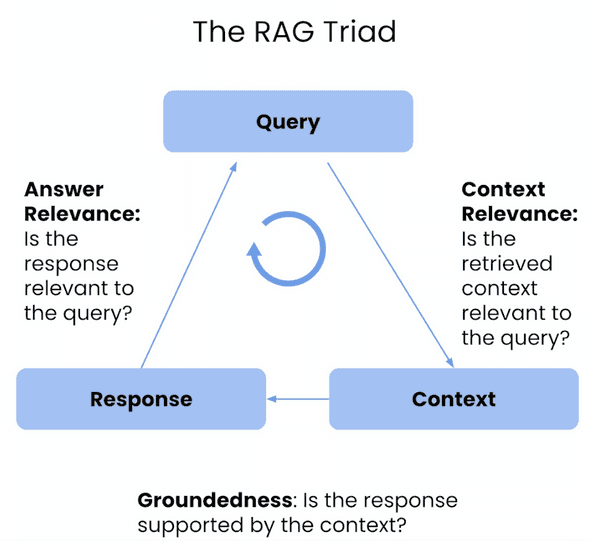

TruLens defines a golden triad of RAG evaluation (see Figure 3). Let’s focus on retrieval relevance. The idea is to quantify how relevant the retrieved content is to the query by computing the ratio of relevant sentences to the total number of sentences in the retrieved documents. An LLM determines whether each sentence is needed to answer the query.
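The ratio itself is simple to compute once a judge is available. The sketch below is not TruLens’s implementation or API; it only illustrates the metric as described above, with a naive sentence splitter and a `judge` callable standing in for the LLM. The keyword-based judge in the usage example is a deliberately crude, hypothetical placeholder.

```python
import re
from typing import Callable

# judge(query, sentence) -> True if the sentence is needed to answer the query.
# TruLens delegates this decision to an LLM; here it is just a parameter.
Judge = Callable[[str, str], bool]

def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def context_relevance(query: str, chunks: list[str], judge: Judge) -> float:
    """Ratio of relevant sentences to all sentences in the retrieved chunks."""
    sentences = [s for chunk in chunks for s in split_sentences(chunk)]
    if not sentences:
        return 0.0
    return sum(judge(query, s) for s in sentences) / len(sentences)

def keyword_judge(query: str, sentence: str) -> bool:
    """Crude, hypothetical stand-in for the LLM judge (ignores the query)."""
    return "toolchain" in sentence.lower()

chunks = [
    "A toolchain bundles a compiler and a linker. The cache lives on disk.",
    "Toolchains are selected per platform.",
]
score = context_relevance("What is a toolchain?", chunks, keyword_judge)
print(round(score, 2))  # 0.67
```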

RAGAS

RAGAS defines a set of evaluation metrics covering both retrieval and generation. Two of them evaluate retrieval: context relevancy (similar to the one defined by TruLens) and context recall.

Context recall is defined as the fraction of statements in a “model” answer that can be attributed to the retrieved documents. This model answer must be provided as a human-crafted ground truth, so the approach is a hybrid human-LLM one. An LLM is responsible for extracting statements from the model answer and checking them against the retrieved context.
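Again as an illustration of the definition rather than RAGAS’s code: given a model answer already split into statements, context recall is the share of those statements supported by the retrieved context. Statement extraction and the `attributed` judgement, both handled by an LLM in RAGAS, are replaced here by a pre-split list and an exact-substring check, purely as placeholders.

```python
from typing import Callable

# attributed(statement, context) -> True if the statement can be inferred from
# the context. RAGAS uses an LLM for this step (and for splitting the answer
# into statements); here both are simplified placeholders.
Attributor = Callable[[str, str], bool]

def context_recall(reference_statements: list[str], retrieved_chunks: list[str],
                   attributed: Attributor) -> float:
    """Fraction of the model answer's statements supported by the retrieved context."""
    if not reference_statements:
        return 0.0
    context = "\n".join(retrieved_chunks)
    supported = sum(attributed(s, context) for s in reference_statements)
    return supported / len(reference_statements)

# Hypothetical human-written model answer, already split into statements.
reference = [
    "Toolchains are selected per platform.",
    "A toolchain bundles a compiler and a linker.",
]
retrieved = ["Toolchains are selected per platform."]

# Exact substring matching is a deliberately naive stand-in for the LLM judgement.
print(context_recall(reference, retrieved, lambda s, ctx: s in ctx))  # 0.5
```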

Limitations of LLM-based evaluation

A fundamental, unspoken assumption underlying the use of LLMs to evaluate retrieval is that the LLM knows enough about the question and the context to judge their relevance. This assumption is hard to justify for, e.g., fairly technical documentation that the model has not seen before, or a subject the model is not fluent in.

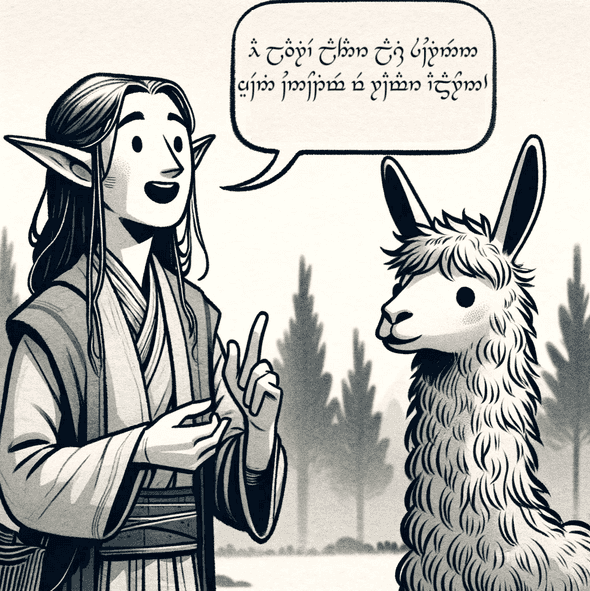

Take the following passage written in Quenya, a fictional language invented by J.R.R. Tolkien that appears in The Lord of the Rings:

Alcar i cala elenion ancalima. Varda Elentári, Tintallë, tiris ninqe eleni. Lórien omentieva Yavanna Kementári. Eärendil elenion ancalima, perina i oiolossë.[2]

And take this query:

Man enyalië Varda Elentári tiris eleni?[3]

Can you tell if the context is relevant to the query?

This is admittedly a constructed example, but we have seen similar cases play out while evaluating a chatbot over Bazel documentation.

This approach has an additional pitfall: even if the retrieved context is relevant to the query, relevance does not measure recall, that is, how many of the relevant documents in the database are retrieved, or how much of the information required to answer the question is retrieved. While the RAGAS recall metric attempts to mitigate this, crafting answers on fairly technical topics, or on topics that require intimate knowledge of a domain or a knowledge base, is both hard and time-consuming. It also does not take into account that a crafted answer might be correct without including every relevant piece of information in the knowledge base.
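A toy calculation makes the gap explicit. Assume, hypothetically, that three chunks in the knowledge base are needed to answer a query and the retriever returns only one of them:

```python
# Hypothetical labels: three chunks in the knowledge base are needed to answer the query.
relevant_in_kb = {"install", "configure", "troubleshoot"}
retrieved = ["install"]  # the retriever returned a single, relevant chunk

# Every retrieved chunk is relevant, so a relevance-only metric is perfect...
relevance = sum(c in relevant_in_kb for c in retrieved) / len(retrieved)
# ...but two thirds of the needed information never reaches the LLM.
recall = len(set(retrieved) & relevant_in_kb) / len(relevant_in_kb)
print(relevance, round(recall, 2))  # 1.0 0.33
```

Computing the second number requires knowing `relevant_in_kb`, which is exactly the labeling effort that LLM-based evaluation tries to avoid.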

Conclusion

The evaluation of RAG systems presents a unique set of challenges, but its value in building usable apps cannot be overstated.

The evaluation frameworks we discussed, both human-based and LLM-based, each have their own advantages and limitations. Human-based evaluations, while thorough and more trustworthy, are labor-intensive and hard to repeat. LLM-based evaluations, on the other hand, are much more scalable and easily repeated, but they rely heavily on LLMs, which have their own biases and limitations.

Stay tuned for the next post in this series, where we present our in-house evaluation framework and share insights and results from real-world cases.

[1] I (Nour) recently attended a conference where someone said they were using RAGAS, and I had a hard time containing my excitement.

[2] “The glory of the light of the stars is brightest. Varda, Queen of the Stars, Kindler, watches over the sparkling stars. Lórien met Yavanna, Queen of the Earth. Eärendil, brightest of stars, sailed on the everlasting night.”

[3] “Who called Varda, the star queen, watcher of the stars?”